
Fraud Detection Using AI in Banking: AI Model Explained

Remember high-profile cases such as the Danske Bank scandal or the legal challenges faced by TD Bank, Capital One, and Wells Fargo? A few years have passed, but they still rank among the top five fraud cases involving financial institutions.

Back then, AI was in its infancy and had barely tapped its potential. From today's perspective, we can see how powerful AI has become: it can pull the rug out from under such schemes, identify manipulations with high precision, and help anticipate criminals' moves before they make them.

Today, nearly half of all financial companies, not just the giants, use AI to detect fraud and anomalies. Mastercard's "Decision Intelligence Pro" exemplifies this shift, with AI-driven insights that promise to cut fraud-related costs by up to 20%. Similarly, PayPal uses AI to filter its 13 million daily transactions, reducing false positives and strengthening customer trust.

Inspired by these advances, our team has moved beyond simply integrating AI technologies to developing our own AI models and training them on datasets. One case we would like to share is a proof of concept (PoC) in which we developed a machine learning (ML) model for fraud detection in banking. Acting as a sophisticated filter, the model parses large volumes of transaction data, learning from past data to recognize fraud patterns and to anticipate and block fraudulent activity.

Old-school fraud detection meets AI: What's the difference?

Traditionally, banks and financial institutions relied on a combination of rule-based systems, statistical analysis, and extensive manual reviews to flag suspicious transactions. The rules were built on parameters derived from past experience and expert intuition. For example, a transaction might be flagged if it showed a sudden spike in activity, was made from an unusual location, or was inconsistent with the customer's typical behavior.
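To make the contrast concrete, here is a minimal Python sketch of what such static rules can look like in code. The thresholds, field names, and the five-times-average spike rule are hypothetical values chosen for illustration, not parameters from any real bank's system.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical hand-tuned parameter, the kind analysts set from experience.
SPIKE_MULTIPLIER = 5.0  # flag amounts above 5x the customer's average


@dataclass
class Transaction:
    amount: float
    country: str
    hour: int  # local hour of day, 0-23


@dataclass
class CustomerProfile:
    avg_amount: float
    usual_countries: set[str] = field(default_factory=set)
    active_hours: range = range(7, 23)  # hypothetical "normal" hours


def rule_based_flags(tx: Transaction, profile: CustomerProfile) -> list[str]:
    """Return the name of every static rule the transaction triggers."""
    flags = []
    if tx.amount > SPIKE_MULTIPLIER * profile.avg_amount:
        flags.append("amount_spike")
    if tx.country not in profile.usual_countries:
        flags.append("unusual_location")
    if tx.hour not in profile.active_hours:
        flags.append("unusual_time")
    return flags


profile = CustomerProfile(avg_amount=80.0, usual_countries={"US"})
print(rule_based_flags(Transaction(950.0, "RO", 3), profile))
# ['amount_spike', 'unusual_location', 'unusual_time']
print(rule_based_flags(Transaction(60.0, "US", 14), profile))
# []
```

A fraudster who keeps each amount under the spike threshold and transacts from the customer's usual country during normal hours triggers none of these rules, which is exactly the rigidity described below.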

While this method was effective to a degree, it struggled to keep pace with fraudsters, who quickly learned to work around the rules. These traditional methods were also time-intensive and prone to both false positives and missed fraud. Manual reviews required extensive labor and carried the risk of human error, while rigid rule-based systems locked institutions into a tiresome, continuous game of catch-up with evolving fraud tactics.

The cost of sticking with outdated methods becomes apparent when we consider the scale of financial fraud. Fintech companies lose the equivalent of 1.7% of their annual revenue to fraud, and smaller firms fare even worse, with losses reaching up to 2.2% of annual revenue.

In the 2024 State of Fraud Benchmark Report, both startup fintech companies and large enterprise banks reported direct fraud losses exceeding $500K over the previous year, showing how widespread and substantial the financial impact of fraud is across the board.

How do machine learning systems work?

At its core, machine learning is like teaching a computer to tell a cat from a dog. With financial transactions, it means distinguishing normal from suspicious activity, even in cases where detecting an anomaly takes more than a simple “if/else” rule. This is why AI development services have progressed from basic rule-based systems to more intelligent, data-driven systems.

Traditional fraud detection methods, such as rule-based systems, follow a strict manual – relying on pre-established rules, which fail to adapt effectively to new and emerging fraud schemes. They are labor-intensive and often result in many false positives, where legitimate activities get flagged as suspicious.

ML, on the other hand, is more like an instinctive detective who can “smell” when something is wrong: it learns from patterns, adapts as new data arrives, and understands the context of transactions, which makes it highly effective at detecting fraud. ML models can also process and learn from vast volumes of data at high speed, allowing a swift response to potential threats.

Because they capture this context, these models can also significantly reduce false positives, so fewer legitimate customer transactions are blocked and the overall customer experience improves.

Fraud scenarios and fraud detection using AI in banking

Every company acknowledges the immense value of data, yet many struggle to tap its full potential. The challenge is to make sure data investments deliver immediate, quantifiable returns while also building the capabilities to harness future developments as data technologies change, new types of data emerge, and data volumes keep rising. Below are common fraud scenarios, how fraud detection using AI in banking applies to each, and how AI and ML models dissect their patterns and learn to anticipate such behavior.

Scenario 1: Account takeover

An attacker uses phishing techniques to acquire a user’s login credentials for a mobile banking app. Once inside the account, the fraudster begins making high-value transactions and transferring funds to illicit accounts.

Traditional detection approach: Rule-based systems would flag transactions that exceed predetermined thresholds or are directed to suspicious accounts. However, if the fraudster mimics the user's typical transaction behavior, these red flags may not be raised.

ML detection approach: ML tools analyze the user's historical transaction data and detect anomalies not only in transaction size but also in the speed of transactions, the time they are made, and the nature of the recipient accounts. Cooperation between AI and the fintech sector makes possible a far more nuanced understanding of normal and abnormal human behavior. For instance, if the user typically logs in from specific devices or locations and transacts at particular times of day, deviations from these patterns, such as logins at odd hours or transfers to previously unknown accounts, would trigger alerts. This behavior-based detection identifies account takeover attempts more accurately.
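As a rough illustration of behavior-based detection, the sketch below turns a user's own history into deviation features (new device, new recipient, unseen hour, amount relative to average) that a downstream model could score. The `Event` fields and feature names are hypothetical; a production system would use far richer signals and a trained model rather than these raw indicators alone.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Event:
    device_id: str
    hour: int  # hour of day, 0-23
    recipient: str
    amount: float


def behavior_features(history: list[Event], new: Event) -> dict[str, float]:
    """Describe how far a new event deviates from this user's own history.

    The result is a feature vector for a model to score, not a verdict.
    """
    known_devices = {e.device_id for e in history}
    known_recipients = {e.recipient for e in history}
    usual_hours = {e.hour for e in history}
    avg_amount = sum(e.amount for e in history) / len(history) if history else 0.0
    return {
        "new_device": float(new.device_id not in known_devices),
        "new_recipient": float(new.recipient not in known_recipients),
        "unseen_hour": float(new.hour not in usual_hours),
        "amount_ratio": new.amount / avg_amount if avg_amount else 0.0,
    }


history = [
    Event("phone-1", 9, "landlord", 1200.0),
    Event("phone-1", 18, "grocer", 80.0),
    Event("laptop-1", 20, "grocer", 95.0),
]
suspicious = Event("unknown-7", 3, "acct-999", 900.0)
print(behavior_features(history, suspicious))
```

Here the amount is only about twice the user's average and would likely pass a fixed threshold, while the device, recipient, and timing features all deviate: the kind of combination a behavior-based model can learn to weigh.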

Scenario 2: Synthetic identity fraud

Fraudsters create new identities by combining real (e.g., stolen SSNs) and fake information to open new accounts or obtain credit. These synthetic identities might not directly trigger traditional checks, as components of the identity are genuine.

Traditional detection approach: Standard validation processes might not catch these identities since parts of the data are real and would pass basic authentication tests. The fraud becomes evident only after the credit is misused or the account is abandoned.

ML detection approach: Machine learning can identify correlations and patterns invisible to the human eye or to traditional methods. By analyzing large volumes of application data, ML models can detect subtle inconsistencies, such as the same phone number or address used across applications with different names, or the same SSN paired with varying personal information. Analyzed at scale, these irregularities can indicate synthetic identity fraud. The ML system flags such applications for further review, stopping potential fraud before it fully manifests.
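One simple way to surface the linkage signals described above is to group applications by a shared attribute and count the distinct identities attached to it. The sketch below is a hypothetical feature-engineering step, not a complete ML model: in practice, counts like these would become input features for a trained classifier. The field names and sample records are invented, and the SSNs deliberately use the invalid 000 prefix.

```python
from collections import defaultdict


def shared_attribute_flags(applications, key, identity_field="name", min_identities=2):
    """Map each value of `key` (e.g. a phone number) to the distinct identities using it.

    Only values linked to at least `min_identities` different identities are returned.
    """
    identities = defaultdict(set)
    for app in applications:
        identities[app[key]].add(app[identity_field])
    return {
        value: sorted(ids)
        for value, ids in identities.items()
        if len(ids) >= min_identities
    }


applications = [
    {"name": "Ann Lee", "phone": "555-0100", "ssn": "000-00-0001", "dob": "1990-01-01"},
    {"name": "Bo Chan", "phone": "555-0100", "ssn": "000-00-0002", "dob": "1985-05-05"},
    {"name": "Cy Diaz", "phone": "555-0199", "ssn": "000-00-0001", "dob": "1979-09-09"},
]

# Same phone number used under different names.
print(shared_attribute_flags(applications, "phone"))
# {'555-0100': ['Ann Lee', 'Bo Chan']}

# Same SSN used with different dates of birth.
print(shared_attribute_flags(applications, "ssn", identity_field="dob"))
# {'000-00-0001': ['1979-09-09', '1990-01-01']}
```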

Scenario 3: Card not present (CNP) fraud

A fraudster obtains credit card details through various means (skimming, hacking, etc.) and uses this information to make unauthorized online purchases or transactions where the physical card is not required.

Traditional detection approach: Rules might flag transactions that are unusually large or made at suspect merchants. However, savvy fraudsters can keep transactions small and use information about the cardholder’s real purchases to avoid detection.

ML detection approach: ML goes beyond transaction amounts and merchants. It evaluates the digital footprint of each transaction, including the device used, its location, the time of the transaction, buying patterns, and even the types of items purchased. An ML model can learn a cardholder's typical behavior and flag transactions that might each seem benign on their own but collectively raise suspicion because they deviate from these broader patterns. For instance, a sudden flurry of small transactions late at night, from a new device, or in a city other than where the cardholder usually transacts could be flagged for review.
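Velocity features are one common way to capture such a "flurry" of transactions. The sketch below computes, for each card, how many transactions and how much spend fall within a trailing time window. The one-hour window, card IDs, and amounts are hypothetical; a model would consume these values alongside device and location features rather than use them as a fixed rule.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def velocity_features(transactions, window=timedelta(hours=1)):
    """For each (card_id, timestamp, amount), count and sum that card's
    transactions in the trailing window, the current one included."""
    recent_by_card = defaultdict(deque)
    features = []
    for card_id, ts, amount in sorted(transactions, key=lambda t: t[1]):
        recent = recent_by_card[card_id]
        recent.append((ts, amount))
        # Drop this card's transactions at or before ts - window.
        while recent[0][0] <= ts - window:
            recent.popleft()
        features.append({
            "card_id": card_id,
            "time": ts,
            "tx_count_window": len(recent),
            "tx_sum_window": sum(a for _, a in recent),
        })
    return features


day = datetime(2024, 5, 1)
txs = [
    ("A", day.replace(hour=23, minute=5), 4.99),
    ("A", day.replace(hour=23, minute=20), 3.50),
    ("B", day.replace(hour=12), 45.00),
    ("A", day.replace(hour=23, minute=31), 6.00),
    ("A", day.replace(hour=23, minute=47), 2.25),
    ("A", day + timedelta(days=1, hours=1), 19.99),
]
for f in velocity_features(txs):
    print(f["card_id"], f["time"].strftime("%H:%M"), f["tx_count_window"], round(f["tx_sum_window"], 2))
```

Card A's count climbs to 4 within 42 minutes of small late-night purchases, then resets for the next-day transaction once the earlier ones fall out of the window.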

How to build a model for fraud detection using AI in banking: General process outline

Our approach prioritizes tackling challenges quickly by building prototypes and establishing a proof of concept (PoC). Our partners come to us with a range of AI challenges: some have straightforward solutions, while others are more complex. Time is often of the essence, either because of our partners' limited availability or the pressing nature of the issue, so we focus on quick prototyping to deliver solutions to our clients efficiently.

Take the following case: a fintech client approaches us. Their platform processes a high volume of financial transactions and needs protection against fraud. The client already has a certain amount of data (to simplify the process, let's assume the data has already been preprocessed). Our goal is not to use a generic model from a third-party provider but to evaluate a custom-built model designed specifically for their needs.

To get a clearer picture of how this works, let's look at the general way such an ML model is implemented. Creating a model for fraud detection using AI in banking involves several parts:

- Data Gathering: This foundational step collects transactional and behavioral data from customers. The more relevant data available, the better the machine learning system can learn to recognize patterns. The data must, of course, be preprocessed into a format the model can learn from as it adjusts its internal parameters (“weights”) during training.

- Enabling Pattern Recognition: The system is configured to pinpoint what patterns of activity are typical and which are potentially fraudulent. Factors like transaction location, size, frequency, and payment method are all considered.

- Model Training: With these patterns identified, the model is trained to discern between normal and suspicious transactions. During this training phase, the model learns which signals are strong fraud indicators.

- Model Deployment and Updating: After training, the model is deployed to monitor transactions in real time. The work doesn't stop there: the model must be updated and retrained regularly to maintain its accuracy and adapt to newly emerging fraud strategies.
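To show what the training stage means in practice, here is a deliberately tiny logistic regression trained by batch gradient descent in plain Python. The article does not say which algorithm the PoC used, so treat this as an illustrative stand-in: the two features (a scaled amount and a new-device indicator) and the toy data are hypothetical.

```python
import math


def sigmoid(z):
    # Clamp z so math.exp cannot overflow on extreme inputs.
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(X, y, lr=0.5, epochs=2000):
    """Fit weights and a bias by batch gradient descent on the log loss."""
    n, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log loss w.r.t. the logit is (prediction - label).
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x, threshold=0.5):
    """Return 1 (suspected fraud) when the predicted probability reaches the threshold."""
    return int(sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= threshold)


# Hypothetical toy data: [scaled amount, new-device flag] -> isFraud
X = [[0.10, 0], [0.20, 0], [0.15, 0], [0.30, 0],
     [0.90, 1], [0.80, 1], [0.95, 1], [0.70, 1]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train_logistic_regression(X, y)
print([predict(w, b, x) for x in X])
# [0, 0, 0, 0, 1, 1, 1, 1]
```

Libraries and managed platforms handle this loop for you, with regularization, better optimizers, and support for far larger datasets; the point is only that "training" means repeatedly nudging the weights to reduce prediction error on labeled examples.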

Let’s walk through the actual steps.

Step 1: Set up the development environment

We chose Google Colab notebooks, which offered Python 3.9 support at the time of our project. Colab provides substantial computing capacity, which eliminates the need for high-end local hardware. You can add Colab to your Google Drive, open a notebook, and verify that the setup works by running a simple print("hello world").
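A slightly more informative first cell can also report the Python version and whether a GPU runtime is attached (the training described later ran on a T4). This is a hypothetical helper; the GPU check relies on `nvidia-smi` being on the PATH, which is typically the case on Colab GPU runtimes but is an assumption, not a guarantee.

```python
import platform
import shutil
import sys


def environment_report(min_version=(3, 9)):
    """Collect basic facts about the current runtime."""
    return {
        "python": platform.python_version(),
        "meets_minimum": sys.version_info[:2] >= min_version,
        # Assumption: nvidia-smi is on the PATH only when a GPU is attached.
        "gpu_available": shutil.which("nvidia-smi") is not None,
    }


print("hello world")
for key, value in environment_report().items():
    print(f"{key}: {value}")
```

If `gpu_available` is False in Colab, you can switch the runtime type under Runtime > Change runtime type.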

Step 2: Set up a Google Cloud Project

When you create your account, Google offers $300 in free credit that can be applied toward compute and storage costs. To use Google Cloud Platform (GCP) resources beyond the free credit, make sure billing is enabled. This step is crucial for accessing the computing power needed to train and deploy your models.

Step 3: Enable Vertex AI API

Vertex AI is a managed machine learning service from Google that combines data engineering, data science, and ML engineering workflows in a single toolset shared across the team.

What made us choose Vertex AI in this case was its comprehensive documentation and considerably streamlined startup process. The platform speeds up development, helping us deliver the model quickly and accurately.

The process is not complicated:

- Navigate to the 'APIs & Services' dashboard within Google Cloud Console.

- Search for Vertex AI API and enable it.

Step 4: Set up Google Colab notebooks on your Google Drive

To set up Google Colab notebooks in your Google Drive, you'll first access Google Colab from your web browser, and create a new notebook using the "New Notebook" option. The notebook, by default, resides in Colab's temporary storage. To save it to your Google Drive, you'll use the File menu and select Save a copy in Drive.

Your notebook is now available in your Google Drive, typically in a folder named "Colab Notebooks". You can move and manage the notebook just like any other file in your drive.

If you want to open an existing notebook, find the file in Google Drive and open it with Google Colab. You can also share these Colab notebooks with others, just like a traditional Google Drive file.

Coding Part

Installation

Data preprocessing is covered in a separate Colab notebook.

The main sources used here are:

- GitHub Repository: Google provides a Python SDK for Vertex AI on GitHub (googleapis/python-aiplatform). This SDK simplifies interacting with Vertex AI and makes the coding work much easier.

- Dataset: Synthetic Financial Datasets For Fraud Detection, taken from Kaggle.

The data contains the transaction type, the amount, the balances before and after the transaction, and several other attributes, including the isFraud label. The simulation assumes that fraudsters take control of customer accounts and try to empty them by transferring the funds to another account and then cashing out of the system. A separate attribute, isFlaggedFraud, marks illegal attempts, which the dataset defines as attempts to transfer more than 200,000 in a single transaction.
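The article's own preprocessing lives in its notebook and is not reproduced here. As a hedged sketch of how these columns can be turned into model features, the code below reads rows in the Kaggle dataset's column layout (`step`, `type`, `amount`, `nameOrig`, `oldbalanceOrg`, `newbalanceOrig`, `nameDest`, `oldbalanceDest`, `newbalanceDest`, `isFraud`, `isFlaggedFraud`), keeps only transfers and cash-outs, and derives balance-error features. The feature names and sample rows are hypothetical; the 200,000 limit mirrors the dataset's description of an illegal attempt.

```python
import csv
import io

TRANSFER_LIMIT = 200_000  # the dataset description's threshold for an illegal transfer


def engineer_features(csv_text):
    """Turn raw transaction rows into simple features for transfers and cash-outs."""
    features = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # The simulated fraud is a transfer followed by a cash-out, so keep only those types.
        if row["type"] not in {"TRANSFER", "CASH_OUT"}:
            continue
        amount = float(row["amount"])
        old_orig, new_orig = float(row["oldbalanceOrg"]), float(row["newbalanceOrig"])
        old_dest, new_dest = float(row["oldbalanceDest"]), float(row["newbalanceDest"])
        features.append({
            "type": row["type"],
            "amount": amount,
            # Hypothetical features: both are 0 when the balances add up exactly.
            "error_balance_orig": new_orig + amount - old_orig,
            "error_balance_dest": old_dest + amount - new_dest,
            "exceeds_transfer_limit": row["type"] == "TRANSFER" and amount > TRANSFER_LIMIT,
            "is_fraud": int(row["isFraud"]),
        })
    return features


# Hypothetical sample rows in the dataset's column layout (not real records).
SAMPLE = """step,type,amount,nameOrig,oldbalanceOrg,newbalanceOrig,nameDest,oldbalanceDest,newbalanceDest,isFraud,isFlaggedFraud
1,PAYMENT,120.00,C100,5000.00,4880.00,M200,0.00,0.00,0,0
1,TRANSFER,250000.00,C101,250000.00,0.00,C300,0.00,0.00,1,0
1,CASH_OUT,250000.00,C300,0.00,0.00,C400,1000.00,251000.00,1,0
"""

for f in engineer_features(SAMPLE):
    print(f)
```

In the sample TRANSFER row the destination balance never changes, so `error_balance_dest` is nonzero; discrepancies like this are the kind of signal a model can learn from.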

Configuration

Dataset configuration involves several key steps in preparing data for machine learning. It starts with data splitting, where the dataset is divided into training, validation, and test subsets. Next come feature selection and data preprocessing: the attributes most relevant to the problem are chosen, and the data is normalized, scaled, and encoded to suit the algorithm. Feature engineering, which creates new features or modifies existing ones, can further improve the model's performance.
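Because fraud is rare, a plain random split can leave a subset with almost no fraudulent rows. A stratified split shuffles each class separately so every subset keeps roughly the original fraud rate. This is a minimal sketch; the 70/15/15 proportions and the `isFraud` label key are assumptions for illustration.

```python
import random
from collections import defaultdict


def stratified_split(rows, label_key, fractions=(0.70, 0.15, 0.15), seed=42):
    """Split rows into train/validation/test, preserving each class's share."""
    by_label = defaultdict(list)
    for row in rows:
        by_label[row[label_key]].append(row)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, val, test = [], [], []
    for group in by_label.values():
        rng.shuffle(group)
        n_train = round(len(group) * fractions[0])
        n_val = round(len(group) * fractions[1])
        train += group[:n_train]
        val += group[n_train:n_train + n_val]
        test += group[n_train + n_val:]  # the remainder goes to test
    return train, val, test


# Hypothetical data: 1,000 transactions, 2% of them fraudulent.
rows = [{"id": i, "isFraud": int(i < 20)} for i in range(1000)]
train, val, test = stratified_split(rows, "isFraud")
for name, subset in (("train", train), ("val", val), ("test", test)):
    print(name, len(subset), sum(r["isFraud"] for r in subset))
# train 700 14
# val 150 3
# test 150 3
```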

Classification tasks often involve imbalanced classes, and fraud detection is a prime example, since fraudulent transactions are a small minority. Balancing the dataset, for example by resampling or by weighting the classes, is crucial to keep the model from being biased toward the majority class. In domains such as image and audio processing, data augmentation may be applied to increase the size and diversity of the training set while preserving data integrity. Finally, storing and formatting the data efficiently makes model training faster and more scalable.
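One common way to balance classes without discarding data is to weight each class inversely to its frequency, so the rare fraud class carries as much total weight in the loss as the majority class. The sketch below uses the formula n_samples / (n_classes * class_count), the same heuristic scikit-learn applies for `class_weight="balanced"`; the 99:1 label mix is hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical labels: 990 legitimate (0) and 10 fraudulent (1) transactions.
labels = [0] * 990 + [1] * 10
weights = balanced_class_weights(labels)
print({cls: round(w, 3) for cls, w in weights.items()})
# {0: 0.505, 1: 50.0}
```

With these weights, both classes contribute the same total (about 500) to a weighted loss. Resampling, either undersampling legitimate rows or oversampling fraud, is the main alternative; which works better depends on the data.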

In short, a thoughtfully configured dataset makes a machine learning model more effective, more accurate, and more reliable in its predictions.

In our case:

- Training Duration: The model training was completed in 12 minutes.

- Memory Usage: The process used 6 GB of RAM.

- Compute Instance: The training was carried out on a T4 GPU instance provided by Google Colab's runtime environment.

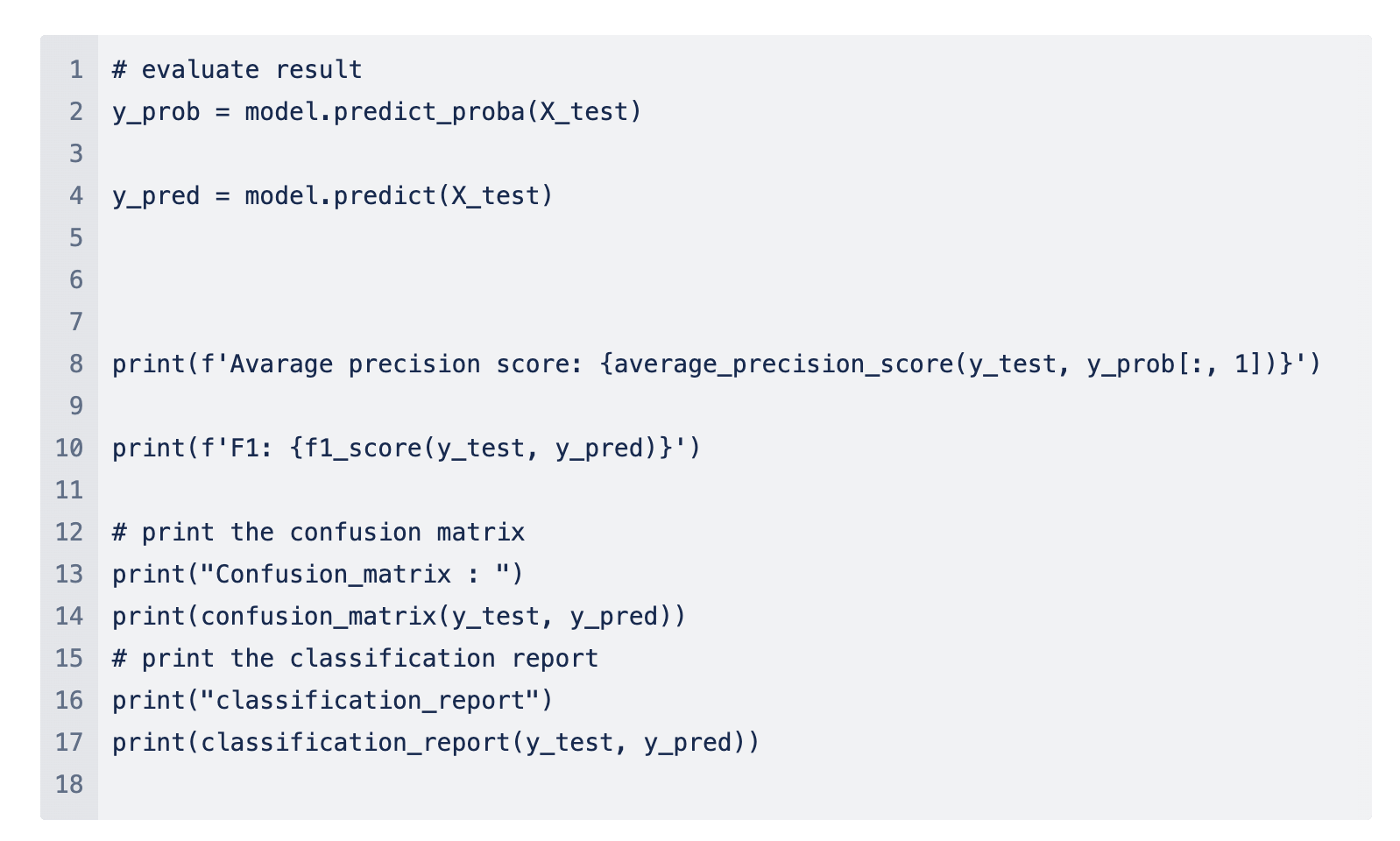

Classification Report

After training the model, we generated a classification report that shows how well it performs. By analyzing its metrics, we can understand the model's strengths and weaknesses, such as how often it correctly identifies each class and how it copes with an imbalanced dataset.
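The article's report code and output are not reproduced here. As a stand-in, this plain-Python sketch computes the per-class precision, recall, F1 score, and support that such reports typically list (scikit-learn's `classification_report` prints these same per-class figures). The labels below are hypothetical, with 1 meaning fraud.

```python
def per_class_report(y_true, y_pred, labels=(0, 1)):
    """Compute precision, recall, F1 and support for each class label."""
    pairs = list(zip(y_true, y_pred))
    report = {}
    for cls in labels:
        tp = sum(1 for t, p in pairs if t == cls and p == cls)
        fp = sum(1 for t, p in pairs if t != cls and p == cls)
        fn = sum(1 for t, p in pairs if t == cls and p != cls)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[cls] = {"precision": precision, "recall": recall, "f1": f1, "support": tp + fn}
    return report


y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 1, 0]
for cls, m in per_class_report(y_true, y_pred).items():
    print(f"class {cls}: precision={m['precision']:.2f} recall={m['recall']:.2f} "
          f"f1={m['f1']:.2f} support={m['support']}")
# class 0: precision=0.83 recall=0.83 f1=0.83 support=6
# class 1: precision=0.75 recall=0.75 f1=0.75 support=4
```

For fraud detection, precision and recall on class 1 usually matter more than overall accuracy, because on imbalanced data a model that labels everything legitimate can still score high accuracy.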

A confusion matrix visualizes the model's performance by showing the counts of true positive, true negative, false positive, and false negative predictions, which gives insight into the model's accuracy, precision, recall, and other metrics. Because the model predicts classes (in our case, whether isFraud is true or false), the matrix makes it easy to see where the model misclassifies entries.
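A minimal way to build the 2x2 matrix by hand, assuming binary labels where 1 (or True) means fraud. Rows are actual classes and columns are predicted classes, the same layout scikit-learn's `confusion_matrix` uses for labels [0, 1]. The sample labels are hypothetical.

```python
def confusion_matrix_2x2(y_true, y_pred):
    """Return [[TN, FP], [FN, TP]]: rows are actual labels, columns predicted ones."""
    matrix = [[0, 0], [0, 0]]
    for t, p in zip(y_true, y_pred):
        if t not in (0, 1) or p not in (0, 1):
            raise ValueError("labels must be 0/1 (or False/True)")
        matrix[int(t)][int(p)] += 1
    return matrix


y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 1, 0]
(tn, fp), (fn, tp) = confusion_matrix_2x2(y_true, y_pred)
print(f"TN={tn} FP={fp} FN={fn} TP={tp}")
# TN=5 FP=1 FN=1 TP=3
print(f"accuracy={(tn + tp) / (tn + fp + fn + tp):.2f}")
# accuracy=0.80
```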

It is also interesting to see which features the ML algorithm relies on most.
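The article does not state how its feature ranking was produced. One model-agnostic option is permutation importance: shuffle one feature column at a time and measure how much accuracy drops. The sketch below is a plain-Python version; the toy `toy_predict` function, which looks only at feature 0, and the sample rows are hypothetical.

```python
import random


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Mean drop in accuracy when each feature column is shuffled independently."""
    rng = random.Random(seed)

    def accuracy(rows):
        return sum(predict(r) == t for r, t in zip(rows, y)) / len(y)

    baseline = accuracy(X)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            shuffled = []
            for row, value in zip(X, column):
                new_row = list(row)
                new_row[j] = value  # replace only feature j
                shuffled.append(new_row)
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical model: flags a transaction when feature 0 (scaled amount) exceeds 0.5.
def toy_predict(row):
    return int(row[0] > 0.5)


X = [[0.1, 7], [0.2, 3], [0.9, 5], [0.8, 1], [0.3, 9], [0.7, 2]]
y = [toy_predict(row) for row in X]
print(permutation_importance(toy_predict, X, y))
```

Feature 1 scores exactly 0.0 because the toy model ignores it. For real workloads, scikit-learn provides a fuller implementation in `sklearn.inspection.permutation_importance`.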

Deploying the model

Deploying a model to Vertex AI involves several steps, which help transition your model from training to an actionable API endpoint that can serve predictions. Here's a breakdown of the process:

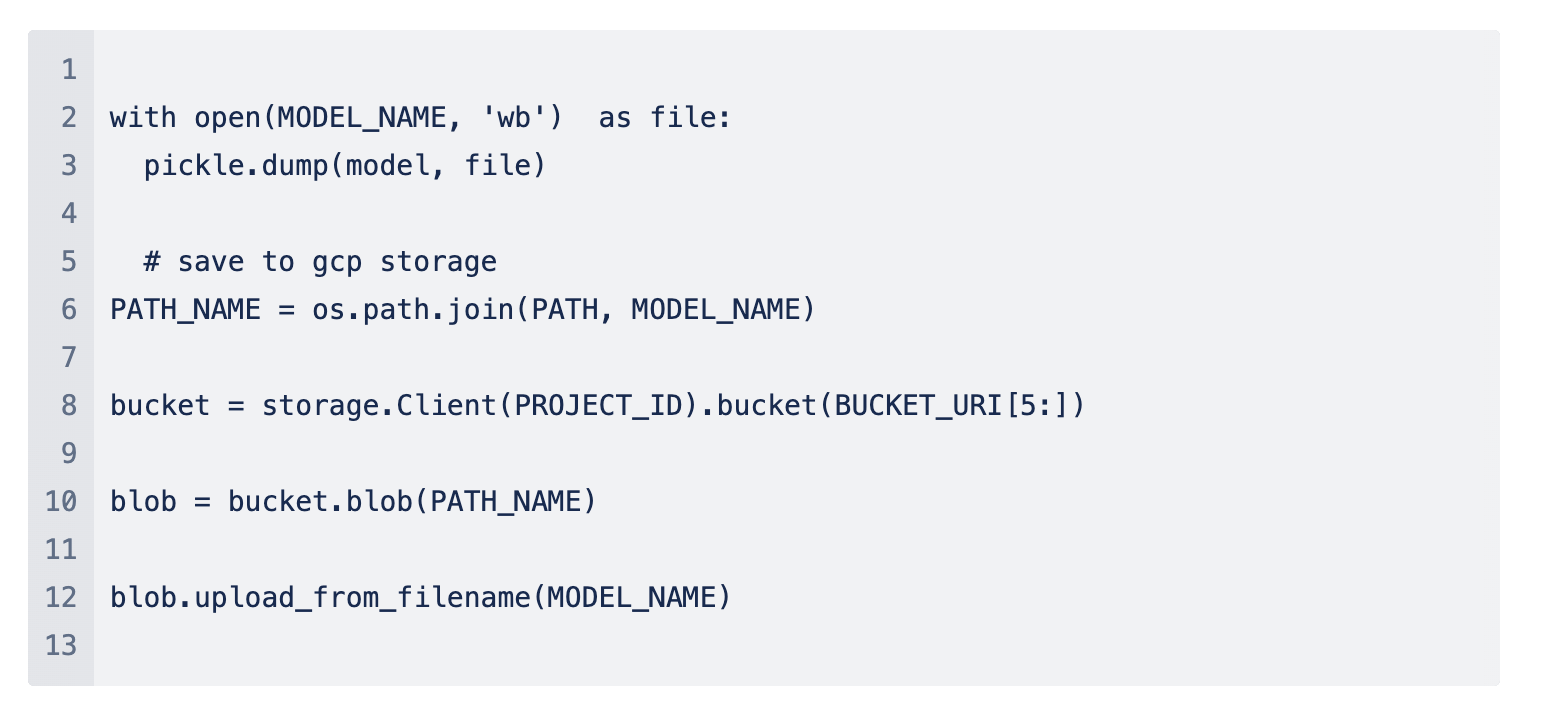

- Store model weights in a storage bucket

Model weights are the learned parameters of your machine learning model (in neural networks, they are often compared to the connections between neurons in the brain, organized in layers). After training, save these weights to a Google Cloud Storage bucket so that Vertex AI can access them during deployment.

Push to storage:

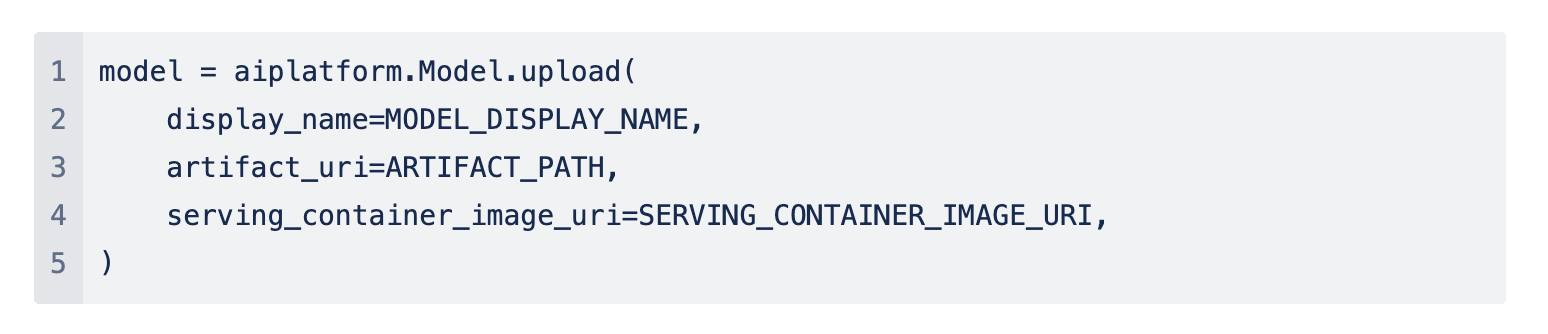

- Register the model

Registering the model involves creating a model resource in Vertex AI, which includes providing details about the model and pointing to where the weights are stored in the storage bucket.

- Create an endpoint

In this context, an endpoint is a URL that applications call to request predictions from a deployed model.

- Deploy the model to the endpoint

Once you have an endpoint, you can deploy your model to it. This step will provision the required infrastructure to serve predictions.

Following this process, Vertex AI will handle the deployment, and after a few minutes, your model will be live. You will be able to request predictions by sending data to the endpoint's URL.
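For readers who prefer to script these four steps rather than click through the console, the following is a hedged sketch using the `google-cloud-storage` and `google-cloud-aiplatform` Python packages. The display names, machine type, file layout, and serving container are placeholders you would replace; prebuilt serving containers expect specific artifact file names, so check the Vertex AI documentation for your framework. The SDK imports are deferred into the function so the URI helper can be used (and tested) without the Google Cloud packages installed.

```python
import os


def gcs_model_uri(bucket: str, prefix: str) -> str:
    """Build the gs:// folder URI that Vertex AI reads model artifacts from."""
    return f"gs://{bucket.strip('/')}/{prefix.strip('/')}/"


def upload_register_deploy(project, location, bucket, prefix, local_model_path,
                           serving_container_image_uri, machine_type="n1-standard-2"):
    """Store weights, register the model, create an endpoint, and deploy to it."""
    # Deferred imports: requires google-cloud-storage and google-cloud-aiplatform.
    from google.cloud import aiplatform, storage

    # 1. Store the model weights in a Cloud Storage bucket.
    blob_name = f"{prefix.strip('/')}/{os.path.basename(local_model_path)}"
    storage.Client(project=project).bucket(bucket).blob(blob_name).upload_from_filename(local_model_path)

    # 2. Register the model, pointing Vertex AI at the artifact folder.
    aiplatform.init(project=project, location=location)
    model = aiplatform.Model.upload(
        display_name="fraud-detector",  # placeholder name
        artifact_uri=gcs_model_uri(bucket, prefix),
        serving_container_image_uri=serving_container_image_uri,
    )

    # 3. Create an endpoint, then 4. deploy the model to it.
    endpoint = aiplatform.Endpoint.create(display_name="fraud-detector-endpoint")
    model.deploy(endpoint=endpoint, machine_type=machine_type)
    return endpoint
```

Calling `upload_register_deploy(...)` with your own project, region, bucket, and container URI would run all four steps; as noted above, the deployment itself can take several minutes.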

Rather than performing every step through the Vertex AI SDK, we completed some of them in the Vertex AI user interface (UI). The UI offers a more visual, interactive experience that can simplify the process: it displays the various aspects and stages of deployment in an organized way, making them easier to understand and monitor.

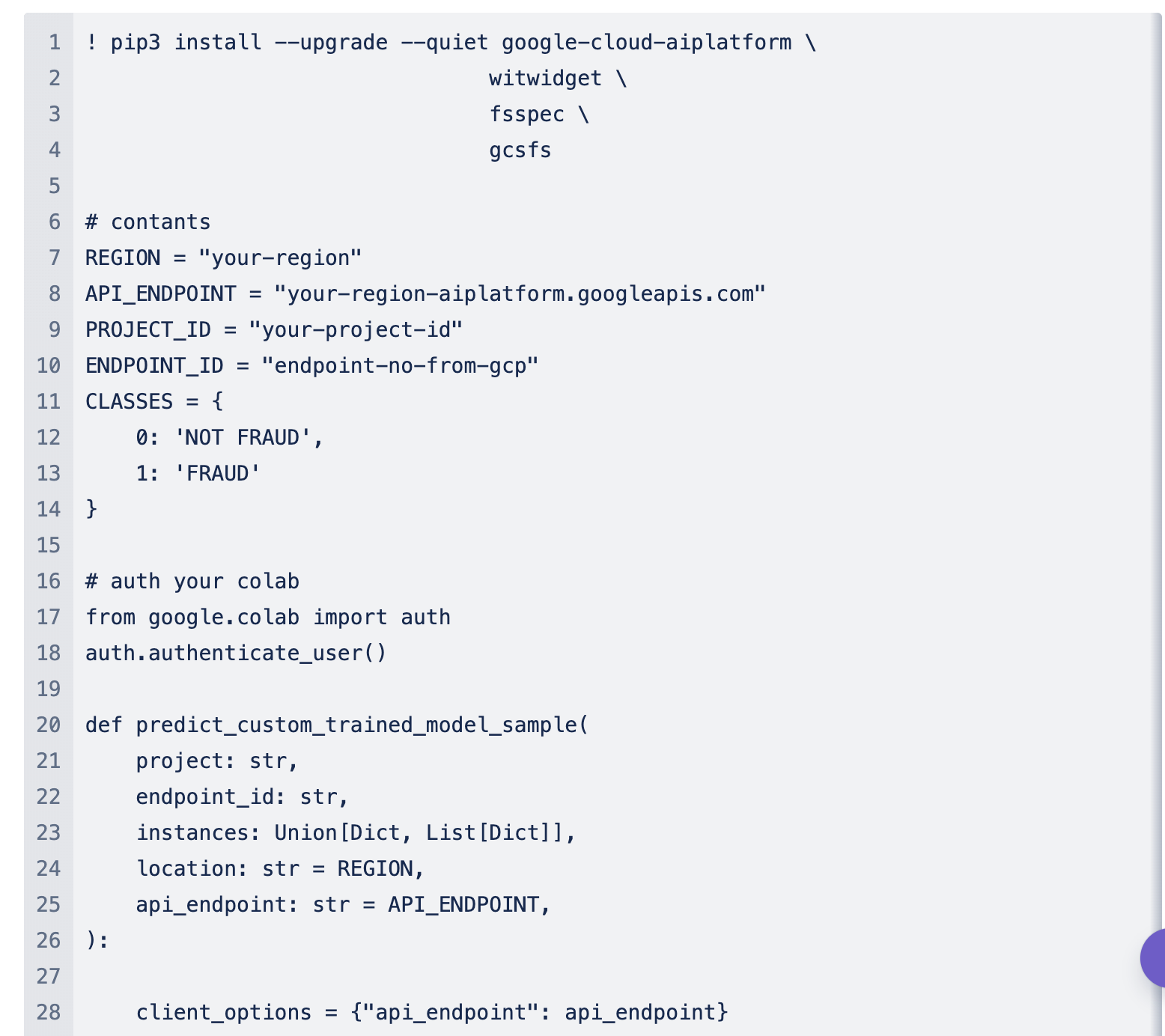

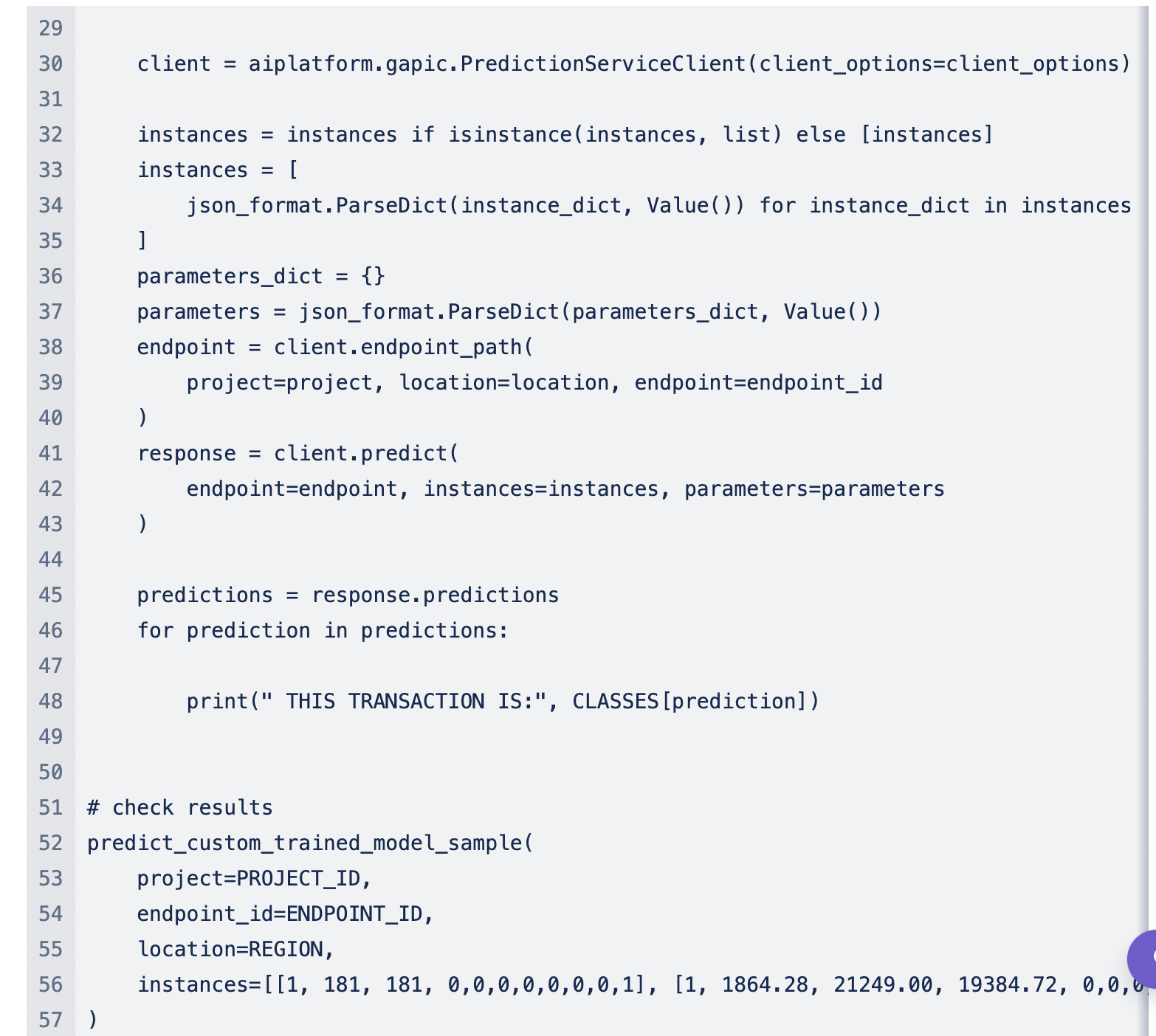

Testing the model

Testing the model means evaluating how well it identifies fraudulent transactions. This crucial phase checks the model's reliability before it is used in a real-world application.

Having trained the model to recognize patterns indicative of fraud, we run it on test data to see whether it accurately identifies fraudulent transactions. A prediction of "1" means that, based on what it has learned, the model suspects the transaction is fraudulent.
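Once deployed, the endpoint can be called over REST. This hedged sketch only builds the request with the Python standard library (it does not send it); the URL follows Vertex AI's `projects/.../locations/.../endpoints/...:predict` pattern, and the body wraps inputs in an `instances` list. The project, region, endpoint ID, feature vector, and token are placeholders, and `fraud_labels` assumes the model returns one class label per instance, matching the 0/1 output described above.

```python
import json
import urllib.request


def build_predict_request(project, location, endpoint_id, instances, access_token):
    """Prepare (but do not send) a Vertex AI online prediction request."""
    url = (
        f"https://{location}-aiplatform.googleapis.com/v1/projects/{project}"
        f"/locations/{location}/endpoints/{endpoint_id}:predict"
    )
    body = json.dumps({"instances": instances}).encode("utf-8")
    headers = {
        "Authorization": f"Bearer {access_token}",
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def fraud_labels(response_json):
    """Translate each prediction into a readable label; 1 means suspected fraud."""
    return ["fraud" if int(p) == 1 else "legitimate" for p in response_json["predictions"]]


# Placeholder values; a real call needs your own IDs and an OAuth access token.
request = build_predict_request(
    "my-project", "us-central1", "1234567890",
    instances=[[250000.0, 0.0, 250000.0]],  # hypothetical feature vector
    access_token="ACCESS_TOKEN",
)
print(request.full_url)
print(fraud_labels({"predictions": [0, 1]}))
# ['legitimate', 'fraud']
```

To actually send it, pass the request to `urllib.request.urlopen` and decode the JSON response; a short-lived token can be obtained with `gcloud auth print-access-token`. The Vertex AI SDK's `Endpoint.predict(instances=...)` wraps the same call.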

What was achieved?

We moved quickly from concept to a working prototype for detecting fraudulent transactions: we first built and tested the AI model in Colab notebooks, then deployed it to Google Cloud Platform (GCP), whose advantages in speed and efficiency were evident.

The model is now ready for integration into our application, which marks a significant milestone in the development pipeline.

Once deployed, the model's real-world effectiveness at identifying fraudulent transactions can be continuously assessed through a direct, user-friendly interface, which helps it remain an effective tool for financial security. This ability to evaluate and react is what turns our project from a mere model into an active participant in safeguarding financial transactions.

Conclusion

This PoC offers a glimpse of what fraud detection using AI in banking can look like. Without getting too technical, the best thing about such a smart, adaptive system is that it helps us not just react to threats but anticipate and outsmart them. As it is retrained on more transactions, the system gets better at sniffing out the bad ones, and because it is fed real-time data, it can handle problems as they arise while also spotting patterns that may signal future threats.
